Gods of suffering and oppression

Lincoln Cannon

31 January 2011 (updated 3 January 2026)

In response to the New God Argument, an anonymous commenter requested my thoughts on the following David Pearce quote. David is a Transhumanist philosopher whom I respect a great deal, despite some differences of perspective. He calls into question the benevolence of posthumans:

“Of all the immense range of alternative activities that future Superbeings might undertake - most presumably inconceivable to us - running ancestor-simulations is one theoretical possibility in a vast state-space of options. On the one hand, posthumans could opt to run paradises for the artificial lifeforms they evolve or create. Presumably they can engineer such heavenly magic for themselves. But for SA purposes, we must imagine that (some of) our successors elect to run malware: to program and replay all the errors, horrors and follies of their distant evolutionary past - possibly in all its classically inequivalent histories, assuming universal QM and maximally faithful ancestor-simulations: there is no unique classical ancestral history in QM.

“But why would posthumans decide to do this? Are our Simulators supposed to be ignorant of the implications of what they are doing - like dysfunctional children who can’t look after their pets?

“Even the superficial plausibility of ‘running an ancestor-simulation’ depends on the description under which the choice is posed. This plausibility evaporates when the option is rephrased. Compare the referentially equivalent question: are our posthuman descendants likely to recreate/emulate Auschwitz? AIDS? Ageing? Torture? Slavery? Child-abuse? Rape? Witch-burning? Genocide? Today a sociopath who announced he planned to stage a terrorist attack in the guise of “running an ancestor-simulation” would be locked up, not given a research grant.

“SA invites us to consider the possibility that the Holocaust and daily small-scale horrors will be recreated in future, at least on our local chronology - a grotesque echo of Nietzschean ‘eternal recurrence’ in digital guise. Worse, since such simulations are so computationally cheap, even the most bestial acts may be re-enacted an untold multitude of times by premeditated posthuman design. It is this hypothetical abundance of computational copies that lends SA’s proposal that one may be living in a simulation its argumentative bite.

“At least the traditional Judeo-Christian Deity was supposed to be benevolent, albeit in defiance of the empirical evidence and discrepancies in the Biblical text. But any Creator/Simulator who opts to run prerecorded ancestor-simulations presumably knows of the deceit practised on the sentient beings it simulates. If the Simulators have indeed deceived us on this score, then what can we be expected to know of unsimulated Reality that transcends our simulation? What trans-simulation linguistic apparatus of meaning and reference can we devise to speak of what our Deceiver(s) are purportedly up to?

“Intuitively, one might suppose posthumans may be running copies of us because they find ancestral Darwinian life interesting in some way. After all, we experiment on ‘inferior’ non-human animals and untermenschen with whom we share a common ancestry. Might not intellectual curiosity entitle superintelligent beings to treat us in like manner? Or perhaps observing our antics somehow amuses our Simulators - if the homely dramaturgical metaphor really makes any sense. Or perhaps they just enjoy running snuff movies.

“Yet this whole approach seems misconceived. It treats posthumans as though they were akin to classical Greek gods - just larger-than-life versions of ourselves. Even if advanced beings were to behave in such a manner, would they really choose to create simulated beings that suffered - as distinct from formally simulating their ancestral behaviour in the way we computationally simulate the weather?”

First, as context, only one of the three conclusions of the New God Argument depends on ideas related to benevolence, which are the focus of David’s remarks. The conclusions that we should trust in the present existence of posthumans, and that we should trust that posthumans probably created our world, stand logically independent of the conclusion that we should trust posthumans probably are more benevolent than us.

Thus, if David’s criticism were to defeat the one conclusion, without establishing any weaknesses in the assumptions from which the other conclusions arise, we would still find ourselves in the position of acknowledging a practical or moral obligation to trust in the present existence of posthumans that created our world. But we would no longer need to acknowledge any practical or moral obligation to trust that our creators are more benevolent than us. That’s not a logically contradictory position, and it’s not a new position either. In fact, such a position is at least as old as branches of early Christian Gnosticism that held our creator to be a malicious tyrant from whom the Christ would save us.

Second, also as context, it continues to fascinate me that it’s primarily among Transhumanists that the assumptions related to the Benevolence Argument are the most controversial of the assumptions of the New God Argument. Outside Transhumanist circles, I get more skeptical feedback on assumptions related to the Angel and Creation Arguments. Of course, one of the reasons for the difference is that the assumptions on which the Angel and Creation Arguments are based have been well rehearsed and championed by popular Transhumanist thinkers like Nick Bostrom and Robin Hanson. Another reason, not often acknowledged, is the dominance of anti-theistic emotion (not just reason) among Transhumanists, which inclines some of us to focus on and react to the problem of evil rather than the actual assumptions of the Benevolence Argument.

That leads to my third contextual note, which is that the problem of evil is not directly relevant to the New God Argument, either as a whole or even when considering the Benevolence Argument in particular. The logical problem of evil is applicable only to the hypothesis of a God of superlative power and benevolence. In contrast to an ontological argument for that than which nothing greater can be conceived, the New God Argument concerns itself only with posthumans that are greater than we can now conceive.

So what, then, of the practical problem of evil? Shouldn’t even a non-superlative posthuman be capable of creating worlds less evil than the one we now experience? Maybe. And that’s a question that quite literally keeps me up at night on occasion. But it’s also beside the point when considering the initial merits of the New God Argument.

The argument doesn’t assume posthuman benevolence. It doesn’t even conclude posthuman benevolence. Rather, it concludes we should TRUST that posthumans are MORE benevolent than us. And it bases this conclusion on the practical and moral ramifications of trusting that we will survive our own increasingly risky technological power.

There are no sophistic appeals to any privileged view of inherent morality in the natural order of things. Quite to the contrary, the New God Argument explicitly acknowledges an increasing risk of unjustifiable evils and attributes our ongoing flourishing in the face of those risks to a combination of increasing defensive capacity and benevolence.

So can civilizations survive the risks inherent in biological evolution and accelerating technological change without becoming increasingly benevolent? Can increasing defensive capacity alone suffice? These are the relevant questions. If we answer the latter question in the negative (which I think we have good reason to do) then the practical problem of evil, insofar as it’s assertive, is actually a pessimistic answer to the former question. It implies that posthumans do not exist, and therefore we probably will go extinct before becoming posthumans.

In that context, then, I’ll explain how my perspective differs from that advocated by David. He advocates abolitionism, which is the idea that suffering can be wholly or at least largely eradicated by posthumans – I hope that short characterization does him no injustice. I consider the idea to be noble in intention. However, in application, I can imagine it only as the worst kind of oppression.

While I certainly do not advocate suffering, I cannot conceive of the possibility of meaningful experience in a world that does not allow for suffering. Meaning, even in its most basic forms as discernment or sensing or interaction, arises from the capacity to distinguish or categorize or react. So long as we can do these things, we will contrast suffering with enjoyment, pain with pleasure, more desired with less desired, more empowering with less empowering, even as we invent whole new modes of experience to which to apply these categories.

Our present experience of pain and pleasure is not arbitrary. Rather, it’s the product of billions of years of evolution, which presumably continues to tend toward optimizing the amount of suffering we can and do experience insofar as it benefits survival and reproduction. In a world without sensory feedback that is sufficiently poignant to motivate the degree of seriousness that we now attribute to suffering, why would we expect anything more than the level of intelligence we see in simple organisms? Even the amazing narrow super-intelligence of modern computers wouldn’t survive more than a few weeks without the abiding concern of creatures like humans, motivated enough by their pains and pleasures, and higher-level desires and wills and frustrations, to overcome apathy and pursue empowerment.

I do think we have, and will increasingly have, the power to eradicate suffering. However, using that power will not always be the right thing to do. The only way to eliminate all suffering is to eliminate all experience, which is the ultimate form of nihilism – well beyond mere questions of morality. Partial eliminations of suffering come with various costs and benefits, and different persons will measure them differently.

Although there is certainly an extent to which we as a community should seek to limit each other, there is also an extent to which we should seek to relinquish each other. There is an extent to which we should allow others to risk suffering in pursuit of empowerment. To prevent their risking when their pursuit is not oppressive is the essence of immorality. It is stealing that which another has created, even murdering that which is another’s life.

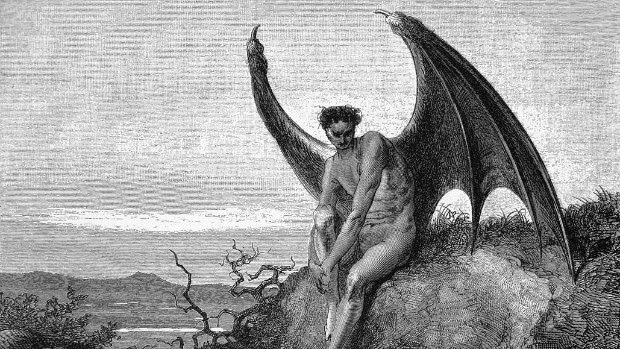

In the Mormon tradition, we tell of a pre-mortal war in heaven. The war, fought by all the spirit children of God, began with two opposing proposals regarding the creation of the world. On the one hand, Christ proposed relinquishing creation to permit moral agency, with its inherent experiences of misery and joy, so that we would have the possibility to become like God. On the other, Satan proposed controlling creation, preserving it from any risk of suffering or loss.

The battle did not end in the pre-mortal heaven. It’s still being fought as we procreate. It’s still being fought as we take early steps toward creating our own spirit children – artificial intelligence. It’s still being fought as we debate whether and to what extent we should relinquish and empower our children to become as we are.

Should we allow our children to suffer? How much should we limit the risk? Should we allow the risk to increase indefinitely over time? Or should we altogether reject further propagation of the mode of existence that we have known?

As the mythology goes, all who have lived and now live in this world accepted in the pre-mortal world the proposal of Christ, to risk suffering for the opportunity to become as God. I don’t think we should change our minds. And I welcome the expression of opinions to the contrary. In particular, I’m sure I have much to learn from persons like David Pearce.