Living in an undecidable halting problem of evil?

Lincoln Cannon

9 May 2011 (updated 3 January 2026)

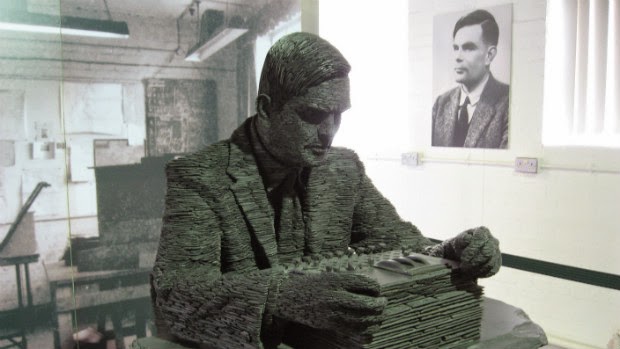

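For some computer programs, we can know in advance how they will run, when they will stop, and what results they will return. However, other computer programs are what we might call undecidable halting problems: we cannot know in advance whether they will ever stop running, let alone what results they will return. Alan Turing proved that no general method can decide, for every possible program, whether it will halt. So for some programs the only way to find out is to actually run them, and even then we learn the answer only if they do stop.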
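As a toy illustration (my choice, not something from Chaitin or New Scientist), consider the Collatz iteration: halve a number if it is even, otherwise triple it and add one, and stop on reaching 1. Nobody has proven that it stops for every starting number, and no shortcut is known for predicting how many steps a given start will take, so in practice we find out by running it. Collatz is an open problem, not one proven undecidable, but it captures the practical situation. The hypothetical `collatz_steps` function below, a minimal sketch assuming Python 3, can confirm that a run halts. An exhausted step budget, however, tells us nothing about whether the run would eventually halt.

```python
from typing import Optional


def collatz_steps(n: int, max_steps: int = 10_000) -> Optional[int]:
    """Run the Collatz iteration from n for at most max_steps steps.

    Hypothetical illustration helper. Returns the number of steps taken to
    reach 1, or None if the budget runs out first. None does not mean the
    run would never stop; it only means we have not found out yet.
    """
    if n < 1:
        raise ValueError("n must be a positive integer")
    steps = 0
    while n != 1:
        if steps >= max_steps:
            return None  # budget exhausted: halting is still an open question
        # Halve even numbers; send odd numbers to 3n + 1.
        n = n // 2 if n % 2 == 0 else 3 * n + 1
        steps += 1
    return steps


if __name__ == "__main__":
    print(collatz_steps(6))                 # 8
    print(collatz_steps(27))                # 111
    print(collatz_steps(27, max_steps=50))  # None
```

Running `collatz_steps(27)` settles that 27 reaches 1 after 111 steps. Running it with a budget of 50 returns `None`, which is exactly the uncomfortable position the halting problem leaves us in: the run has not stopped yet, and that alone cannot tell us whether it ever will.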

While reading New Scientist on “the limits of knowledge,” I came across a reference to Gregory Chaitin, who is doing some intriguing work in a field he calls “metabiology,” applying to biology the lessons we’ve learned about the limits of computation. It appears that one of his hypotheses is that evolution is an undecidable halting problem, infinitely long and irreducibly complex.

Gregory’s hypothesis is compatible with my views of theology and theodicy. Maybe we’re living in a posthuman-computed world (which we should trust to be the case if we trust that we or our descendants will eventually compute many worlds like our own). And maybe the posthuman-computer wants to make more and better posthuman-computers.

If so, our world may be one of many undecidable halting problems, or one of many variations of a single undecidable halting problem, that the posthuman-computer spawned by slightly varying parameters that have proven promising in the past. One consequence of this would be that our posthuman-computer simply cannot attain its desired results for our world without actually running our world, evil and all.

It seems there’s no meaningful way to create creators without relinquishing creations. Is it possible to relinquish computed creations without incorporating into them undecidable halting problems? Can we create artificial general intelligence without undecidable halting problems? Can we create artificial general intelligence that does not experience evil?