Speculating Around Speculative Singularity Roadblocks

Lincoln Cannon

11 July 2011 (updated 9 February 2026)

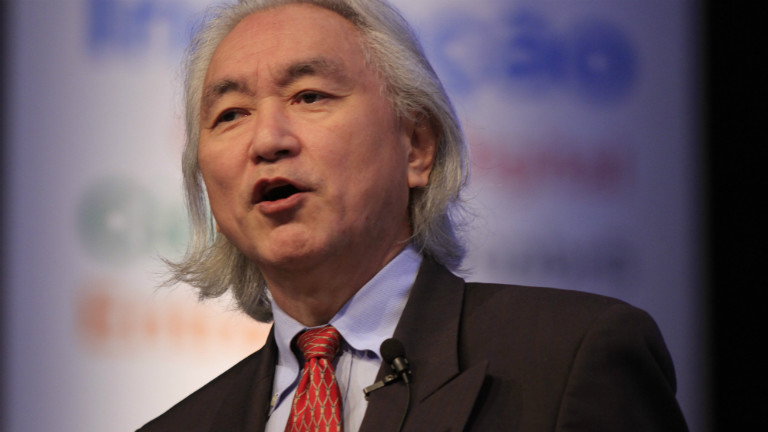

In his book, Physics of the Future, Michio Kaku outlines six roadblocks to the Singularity. The roadblocks are at least as speculative as the technological singularity itself. And we can reasonably speculate our way around them. Below are Michio’s proposed roadblocks, each in quotation marks, followed by my thoughts.

“First, the dazzling advances in computer technology have been due to Moore’s law. These advances will begin to slow down and might even stop around 2020-2025, so it is not clear if we can reliably calculate the speed of computers beyond that …”

The idea that advances in computing tech will slow down (or stop) in 2020-2025 is no more warranted than earlier skeptical and Luddite predictions about technology. The facts of the matter are:

- We have observed an exponential advance in many aspects of computing tech for many years.

- Different persons point to different reasons to predict the trend will continue (or not) in the future.

I suspect the trend will continue for a long time. But even if it continues only for a few more decades, we’re in for an unprecedented wild ride.

“Second, even if a computer can calculate at fantastic speeds like 10^16 calculations per second, this does not necessarily mean that it is smarter than us … Even if computers begin to match the computing speed of the brain, they will still lack the necessary software and programming to make everything work. Matching the computing speed of the brain is just the humble beginning.”

Even if humans calculate slower than computers, this does not mean humans are smarter than computers. Speed of calculation is an aspect of intelligence, as are quantity of calculation and algorithms. Computers already are beating humans in each of these areas in various ways and to various extents. Did you watch Watson win Jeopardy?

“Third, even if intelligent robots are possible, it is not clear if a robot can make a copy of itself that is smarter than the original … John von Neumann … pioneered the question of determining the minimum number of assumptions before a machine could create a copy of itself. However, he never addressed the question of whether a robot can make a copy of itself that is smarter than it … Certainly, a robot might be able to create a copy of itself with more memory and processing ability by simply upgrading and adding more chips. But does this mean the copy is smarter, or just faster …”

A robot doesn’t need to make a copy of itself that is more intelligent. It only needs to become more intelligent and make copies of itself. Robots can already make copies of themselves. Robots can already learn. We should expect their abilities in these areas to continue to improve.

At the least, if we (and robots) continue to improve algorithms, robots will make themselves smarter the same way that humans became smarter: evolution. The principles and creative power of natural selection apply to technological evolution. And the technological cycle of inheritance-variation-selection is orders of magnitude faster than biological evolution.
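For readers who like to see the cycle spelled out, here is a minimal sketch of inheritance, variation, and selection as a loop. Everything in it is an assumption for illustration: the bit-string "genome", the toy `fitness` function that just counts 1 bits, and the parameter values. It is not a model of how any real robot or AI system improves itself.

```python
import random


def fitness(genome):
    # Toy objective (assumed for illustration): count the 1 bits.
    return sum(genome)


def evolve(genome_length=20, offspring=10, generations=50,
           mutation_rate=0.05, seed=0):
    """Run a simple inheritance-variation-selection loop on a bit string."""
    rng = random.Random(seed)
    parent = [rng.randint(0, 1) for _ in range(genome_length)]
    history = [fitness(parent)]
    for _ in range(generations):
        # Inheritance and variation: each child copies the parent,
        # flipping each bit with a small probability.
        children = [
            [bit ^ (rng.random() < mutation_rate) for bit in parent]
            for _ in range(offspring)
        ]
        # Selection: keep the fittest of the parent and its children.
        parent = max([parent] + children, key=fitness)
        history.append(fitness(parent))
    return parent, history


if __name__ == "__main__":
    best, history = evolve()
    print("fitness at start:", history[0])
    print("fitness at end:  ", history[-1])
```

Because the parent competes with its own children, fitness never goes down from one generation to the next. The loop runs as fast as the computer allows, which is the point of the comparison with biological generations.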

“Fourth, although hardware may progress exponentially, software may not … Engineering progress often grows exponentially … [but] if we look at the history of basic research, from Newton to Einstein to the present day, we see that punctuated equilibrium more accurately describes the way in which progress is made.”

Software is already progressing at exponential rates, in some areas faster than hardware. Despite bureaucracy, even the government reports that algorithms are beating Moore’s Law.

“Fifth, … the research for reverse engineering the brain, the staggering cost and sheer size of the project will probably delay it into the middle of this century. And then making sense of all this data may take many more decades, pushing the final reverse engineering of the brain to late in this century.”

Progress in reverse engineering the human brain will probably match our experience with mapping the human genome. It will have a slow beginning and a fast ending, as tools continue to improve exponentially. Because of the nature of exponentials, even if estimates of the complexity of the human brain are off by a few orders of magnitude, the additional amount of time required will be measured in decades – not centuries or millennia.
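The arithmetic behind that last claim can be sketched in a few lines. The assumption, and it is only an assumption, is that the capability of our tools doubles at a steady rate; the doubling times of one and two years below are hypothetical values chosen for illustration, not measurements.

```python
import math


def extra_years(orders_of_magnitude, doubling_time_years):
    """Extra time needed if a task is 10**k times harder than estimated,
    assuming tool capability doubles every `doubling_time_years`."""
    doublings = orders_of_magnitude * math.log2(10)
    return doublings * doubling_time_years


if __name__ == "__main__":
    for k in (1, 2, 3):
        for t in (1.0, 2.0):
            print(f"{k} orders of magnitude, doubling every {t} years: "
                  f"{extra_years(k, t):.1f} extra years")
```

Each order of magnitude costs about 3.3 doublings. So even a thousandfold underestimate adds roughly 10 to 20 years under these assumed doubling times.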

“Sixth, there probably won’t be a ‘big bang’, when machines suddenly become conscious … there is a spectrum of consciousness. Machines will slowly climb up this scale.”

Machines may already be conscious to some extent. How do you know I’m conscious? Prove it.

The Institute for Ethics and Emerging Technologies later republished this article.